Minglai Yang

I open black boxes to build trustworthy language models.

📧 mingly@arizona.edu

💼 Harvill 454

📍 Tucson, AZ 85721, USA

I am a final-year undergraduate student in Computer Science at the University of Arizona (GPA: 4.0/4.0) and am on track to complete my Bachelor’s degree in just over two years (Fall 2025). As Founder & President of the AI Club at UA, I run workshops, host invited speakers, and lead industry collaborations, raising $14K+ to support student AI research and education. In our CS department, I currently serve as a TA (Discrete Math I & II) and as an RA in CLULAB, IVILAB, and ML4AI LAB.

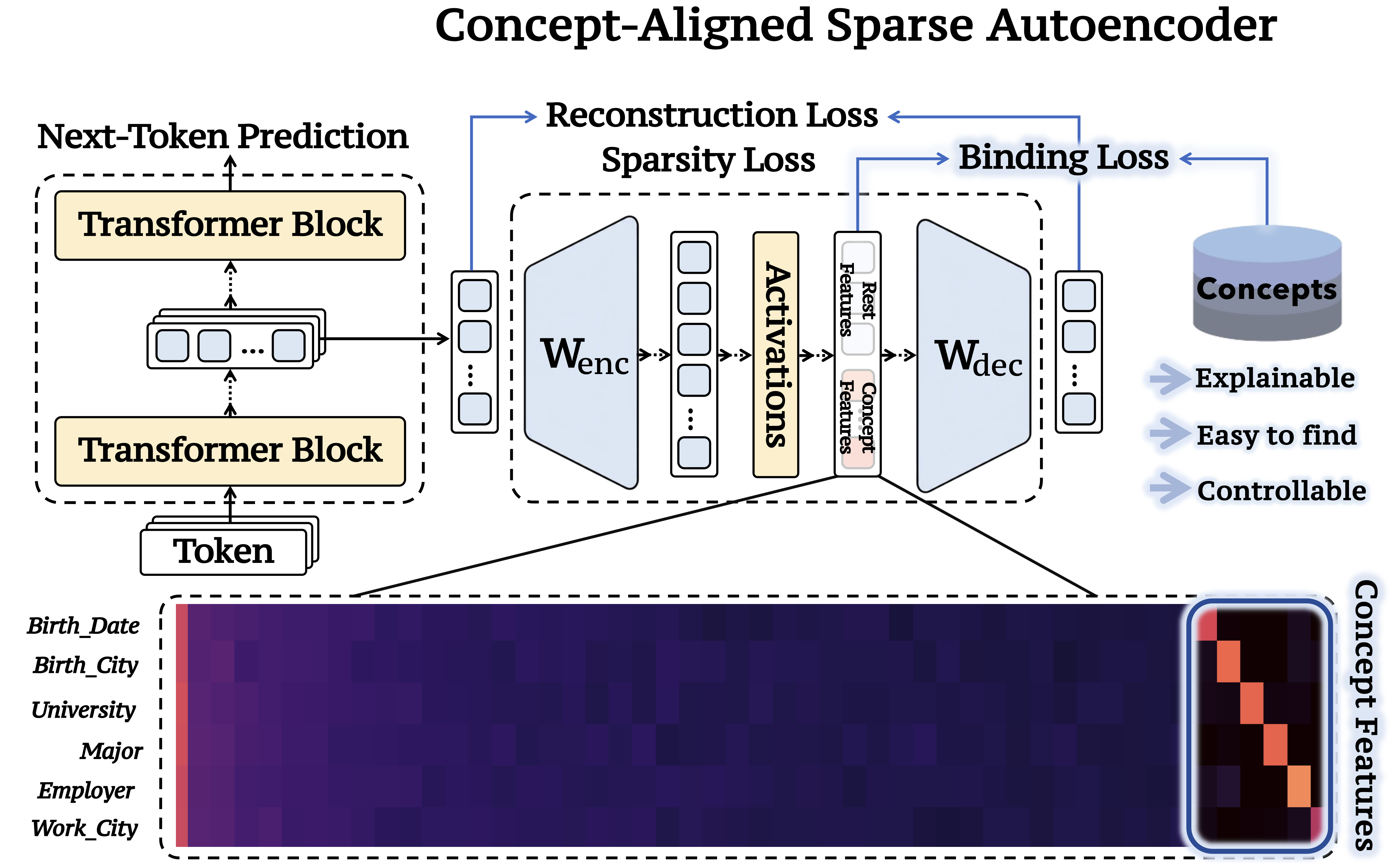

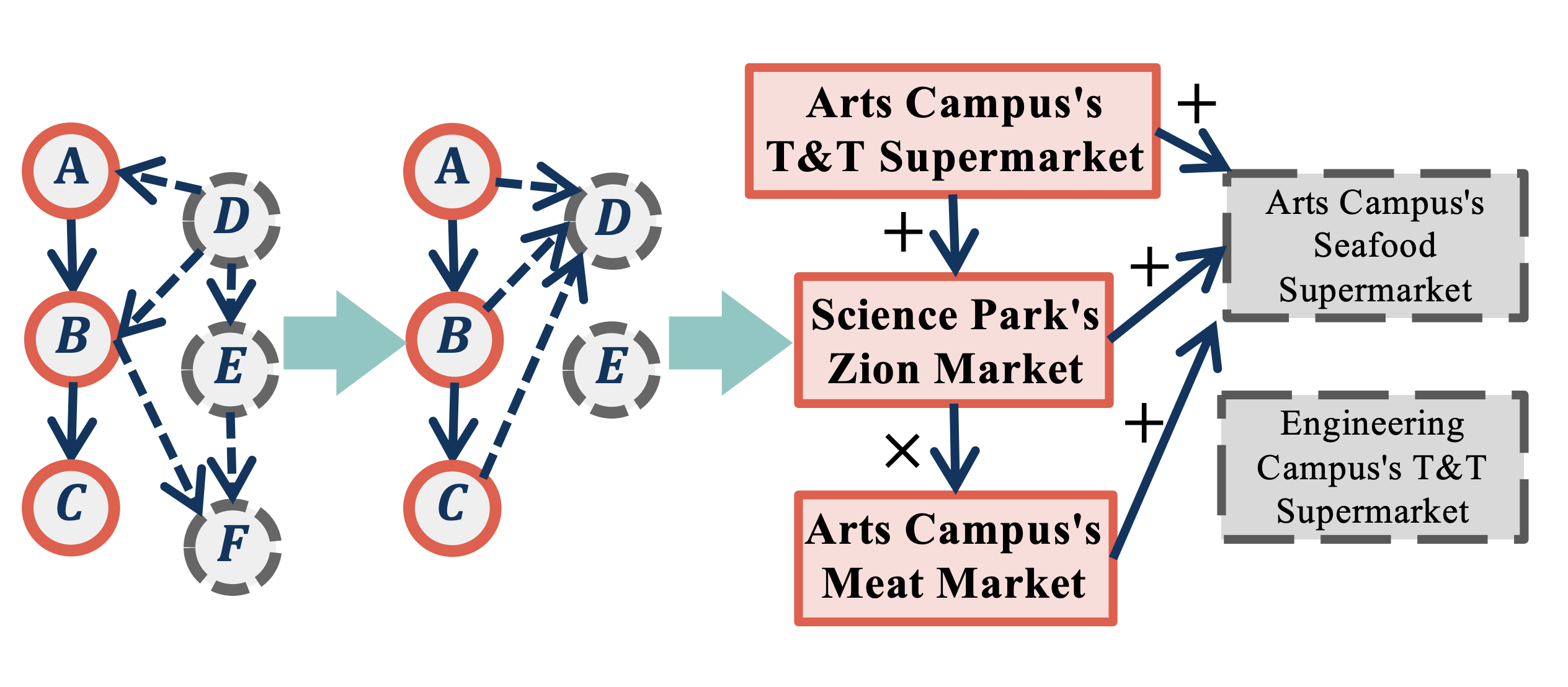

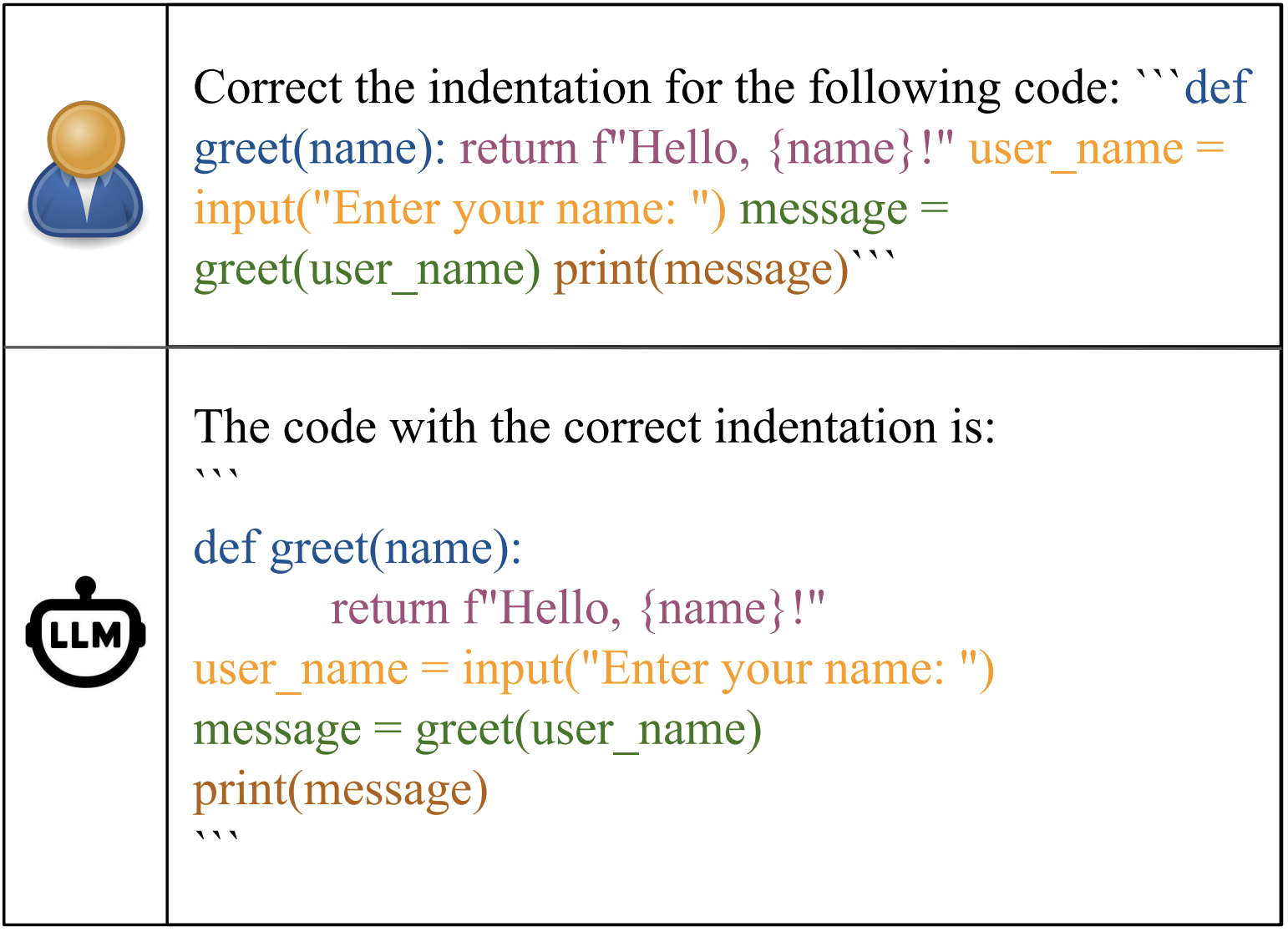

My research focuses on building trustworthy LLMs: robust (EMNLP 2025), explainable (in submission), and useful (EMNLP 2025). Ultimately, I’m interested in two overarching questions:

- 🔍 Deconstruction of LLMs: How can we open the black box to reveal the internal mechanisms?

- 🛠️ Reconstruction toward Trustworthy LLMs: How do we translate mechanistic insight into models that are robust, explainable, and useful in practice?

I am fortunate to conduct research co-advised by Profs. Mihai Surdeanu, Liangming Pan, Kobus Barnard, and Steven Bethard. I also collaborate with Profs. Adarsh Pyarelal, William Yang Wang, and Chicheng Zhang.

I previously worked as a Machine Learning Engineer intern at CoreTechs. In Summer 2025, I was a research intern at the Knowledge Engineering Group (KEG), Tsinghua University, supervised by Prof. Juanzi Li and working on LLM reasoning mechanisms.

PhD Applications – Fall 2026

I am applying to PhD programs in NLP, interpretability, and reasoning for Fall 2026. My goal is to develop LLMs that reason robustly, efficiently, and transparently. If you’re working in this space, I’d love to connect!

news

| Date | News |
|---|---|
| Oct 19, 2025 | We took 2nd place at the Reddit Wildcat Hackathon 2025! |
| Oct 17, 2025 | Honored to earn UA’s Top 10 Undergraduate Research Travel Grant 🎓; headed to my EMNLP oral. See you in Suzhou! ✈️ |
| Aug 20, 2025 | Both of my submissions were accepted to EMNLP 2025 Main (Oral) 🎉 (acceptance rate: 22.16%). Grateful to all my co-authors, with special thanks to Profs. Liangming Pan, Mihai Surdeanu, and William Wang. |
| Jun 05, 2025 | I will be a research intern at THUKEG, Department of Computer Science, Tsinghua University, this summer, advised by Prof. Juanzi Li and focusing on reasoning mechanisms. |
| May 09, 2025 | Galileo Circle Scholar, University of Arizona: a Top 0.8% academic award. |
| Feb 18, 2025 | As President of the AI Club at the University of Arizona, I led the club in raising over $12,000. |
| Dec 03, 2024 | Excited to receive an RAship! I’ll lead a project advised by Liangming Pan and collaborate with William Wang at the UCSB NLP Group. |
| Nov 01, 2024 | I’m featured in our department newsletter! Huge thanks to Rishu Singh for the shoutout. |
| Oct 03, 2024 | I’ve joined the Computational Language Understanding Lab (CLULAB)! I’m grateful to be advised by Mihai Surdeanu, working on NLP and efficient LLMs. |
| May 09, 2024 | I’m starting a new position as Machine Learning Engineer Intern at CoreTechs! |
| Mar 14, 2024 | I’m starting a new position as AI Engineer at AI Core, University of Arizona. |